I notice what appears to be an interesting quirk.

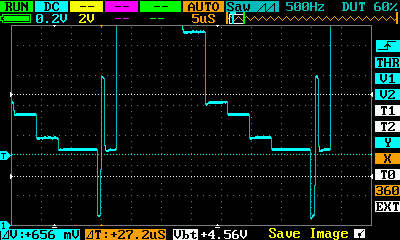

If I display the colour bar waveform at 0.2V/div, the top is clipped off:

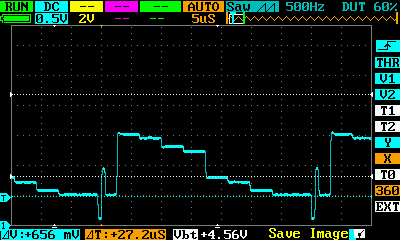

If I export that waveform to CSV, the clipping is retained: no value in the CSV data goes above 199, whereas the bottom of the sync pulse sits at around 8.

Am I doing something silly, or is the CSV output limited to what’s displayable, as opposed to what’s actually in the sample buffer?

Here’s the sample data for one line of colour bars, 0.2V/div, 5µs/div, track one only:

TRACK1 50mV,TRACK2 ,TRACK3,TRACK4,

008,095,060,010,

008,095,060,010,

008,095,060,010,

146,095,060,010,

148,095,060,010,

146,095,060,010,

145,095,060,010,

073,095,060,010,

072,095,060,010,

073,095,060,010,

073,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

073,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,097,060,010,

199,097,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,097,060,010,

199,097,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,097,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,097,060,010,

199,097,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,097,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,097,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

199,095,060,010,

192,095,060,010,

191,095,060,010,

191,095,060,010,

191,095,060,010,

191,097,060,010,

191,095,060,010,

191,095,060,010,

191,095,060,010,

191,095,060,010,

191,095,060,010,

191,095,060,010,

191,095,060,010,

191,097,060,010,

191,095,060,010,

191,095,060,010,

191,095,060,010,

191,095,060,010,

191,095,060,010,

191,095,060,010,

191,095,060,010,

192,095,060,010,

191,095,060,010,

114,095,060,010,

118,095,060,010,

120,095,060,010,

120,097,060,010,

120,095,060,010,

121,095,060,010,

120,095,060,010,

121,095,060,010,

121,095,060,010,

121,095,060,010,

121,095,060,010,

121,095,060,010,

121,095,060,010,

121,095,060,010,

121,095,060,010,

121,095,060,010,

121,095,060,010,

121,095,060,010,

121,095,060,010,

121,097,060,010,

121,095,060,010,

121,095,060,010,

114,095,060,010,

109,095,060,010,

109,095,060,010,

109,095,060,010,

109,095,060,010,

109,095,060,010,

109,095,060,010,

109,095,060,010,

109,095,060,010,

109,095,060,010,

109,095,060,010,

109,097,060,010,

109,095,060,010,

109,095,060,010,

108,095,060,010,

109,097,060,010,

109,095,060,010,

109,095,060,010,

109,095,060,010,

109,095,060,010,

109,095,060,010,

109,095,060,010,

109,097,060,010,

085,095,060,010,

085,095,060,010,

085,095,060,010,

085,095,060,010,

086,095,060,010,

085,095,060,010,

086,095,060,010,

086,095,060,010,

086,095,060,010,

085,095,060,010,

086,095,060,010,

085,095,060,010,

085,095,060,010,

086,095,060,010,

085,097,060,010,

086,095,060,010,

085,095,060,010,

085,095,060,010,

086,095,060,010,

086,095,060,010,

085,095,060,010,

085,095,060,010,

073,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

074,095,060,010,

010,095,060,010,

008,095,060,010,

008,095,060,010,
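For anyone who wants to check their own exports, here is a rough Python sketch that reads a CSV in the layout above and reports the maximum value on a track plus the longest run of consecutive samples sitting at that maximum. The helper names `track_values` and `longest_run_at_max` are my own (hypothetical, not part of the scope software), and the sketch assumes a single header row followed by one comma-separated row per sample. A long flat run at the maximum, like the white bar pinned at 199 here, strongly hints that the trace has hit a ceiling, but the check cannot tell whether that ceiling comes from the export or from the acquisition itself.

```python
import csv
import io


def track_values(csv_text, column=0):
    """Return integer samples for one track (hypothetical helper).

    Assumes one header row, then one row per sample; blank lines are skipped.
    """
    rows = csv.reader(io.StringIO(csv_text))
    next(rows)  # skip header, e.g. "TRACK1 50mV,TRACK2 ,TRACK3,TRACK4,"
    return [int(row[column]) for row in rows if row and row[column].strip()]


def longest_run_at_max(values):
    """Return (max value, length of the longest consecutive run at that value)."""
    peak = max(values)
    best = run = 0
    for v in values:
        run = run + 1 if v == peak else 0  # reset the run when we leave the peak
        best = max(best, run)
    return peak, best


# Usage on a few rows copied from the export above.
sample = """TRACK1 50mV,TRACK2 ,TRACK3,TRACK4,
008,095,060,010,
146,095,060,010,
199,095,060,010,
199,095,060,010,
199,097,060,010,
191,095,060,010,
"""
peak, run = longest_run_at_max(track_values(sample))
print(peak, run)  # 199 3
```

Run against the full line of colour bars, this reports 199 held for 66 consecutive samples, which is the flat top visible on screen.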

In the meantime, it’ll be only slightly slower than native C code would be.