Hello,

We are trying to send a 2MBps stream to a J4012, which is acting as the RTSP client:

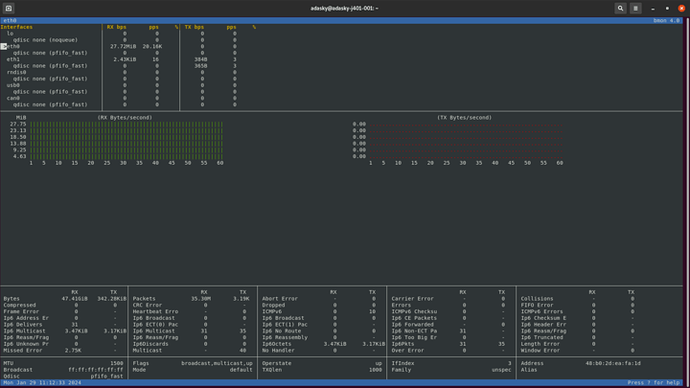

We see many RX drops and overflow errors. This happens regardless of which Ethernet port we use (eth0 or eth1), and regardless of whether there is a switch between the client and the server.

We are using the stock JetPack 5.1.1 image.

What can be the source of the issue?

Thanks,

Oshi

We have made changes to buffer limits to support streaming:

net.ipv4.tcp_rmem = 4096 262144 16777216

net.ipv4.tcp_wmem = 4096 262144 16777216

net.core.netdev_max_backlog = 5000

net.core.wmem_max = 12582912

net.core.rmem_max = 12582912

net.ipv4.tcp_rmem = 10240 87380 12582912

net.ipv4.tcp_wmem = 10240 87380 12582912
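The list above sets `net.ipv4.tcp_rmem` and `net.ipv4.tcp_wmem` twice with different values. Assuming all of these lines are applied in the order listed (for example from one sysctl configuration file, which is an assumption and not something stated here), the later value replaces the earlier one. Here is a minimal Python sketch to see which values would end up effective. The function name `effective_settings` is made up for illustration.

```python
# Settings copied from the post, in the order they were listed.
SETTINGS = """\
net.ipv4.tcp_rmem = 4096 262144 16777216
net.ipv4.tcp_wmem = 4096 262144 16777216
net.core.netdev_max_backlog = 5000
net.core.wmem_max=12582912
net.core.rmem_max=12582912
net.ipv4.tcp_rmem= 10240 87380 12582912
net.ipv4.tcp_wmem= 10240 87380 12582912
"""


def effective_settings(text):
    """Return (effective, overridden) for key=value lines applied top to bottom.

    Assumption: a later assignment to the same key replaces an earlier one.
    """
    effective = {}
    overridden = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blanks and comments
        key, _, value = line.partition("=")
        key = key.strip()
        value = " ".join(value.split())  # normalise whitespace
        if key in effective and effective[key] != value:
            overridden.setdefault(key, []).append(effective[key])
        effective[key] = value
    return effective, overridden


effective, overridden = effective_settings(SETTINGS)
for key, value in effective.items():
    note = f"  (replaces {overridden[key]})" if key in overridden else ""
    print(f"{key} = {value}{note}")
```

Note that `tcp_rmem` and `tcp_wmem` apply to TCP sockets only; the receive buffer of a UDP socket is bounded by `net.core.rmem_default` and `net.core.rmem_max`.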

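Kernel Interface table

| Iface | MTU | RX-OK | RX-ERR | RX-DRP | RX-OVR | TX-OK | TX-ERR | TX-DRP | TX-OVR | Flg |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| can0 | 16 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ORU |
| eth0 | 1500 | 48029671 | 0 | 290 | 0 | 4392 | 0 | 0 | 0 | BMRU |
| eth1 | 1500 | 238740 | 0 | 3603 | 0 | 4295 | 0 | 0 | 0 | BMRU |
| lo | 65536 | 1413 | 0 | 0 | 0 | 1413 | 0 | 0 | 0 | LRU |
| rndis0 | 1500 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | BMU |
| usb0 | 1500 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | BMU |
| wlan0 | 1500 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | BMU |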
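To put the RX-DRP counts from the interface table in proportion, here is a rough Python sketch that parses the rows and computes drops relative to RX-OK for each interface. The helper names `parse_interface_table` and `rx_drop_ratio` are hypothetical, and the ratio is only a coarse indicator because the counters are cumulative since boot.

```python
# Rows copied from the interface table in the post (idle interfaces omitted).
NETSTAT_I = """\
Iface MTU RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg
can0 16 0 0 0 0 0 0 0 0 ORU
eth0 1500 48029671 0 290 0 4392 0 0 0 BMRU
eth1 1500 238740 0 3603 0 4295 0 0 0 BMRU
"""


def parse_interface_table(text):
    """Parse whitespace-separated rows into {iface: {column: int}}."""
    lines = [ln.split() for ln in text.splitlines() if ln.strip()]
    header, rows = lines[0], lines[1:]
    table = {}
    for fields in rows:
        row = dict(zip(header, fields))
        name = row.pop("Iface")
        row.pop("Flg")  # flags are not numeric, drop them here
        table[name] = {column: int(value) for column, value in row.items()}
    return table


def rx_drop_ratio(counters):
    """Dropped receive packets relative to RX-OK (0.0 if nothing was received)."""
    rx_ok = counters["RX-OK"]
    return counters["RX-DRP"] / rx_ok if rx_ok else 0.0


table = parse_interface_table(NETSTAT_I)
for name, counters in table.items():
    print(f"{name}: RX-DRP={counters['RX-DRP']} ratio={rx_drop_ratio(counters):.6%}")
```

With the numbers posted here, eth1 has a far higher drop ratio than eth0 even though eth0 carried most of the traffic.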

Ip:

Forwarding: 2

48876911 total packets received

11 with invalid addresses

0 forwarded

0 incoming packets discarded

48876719 incoming packets delivered

9829 requests sent out

15776 dropped because of missing route

14 reassemblies required

7 packets reassembled ok

Icmp:

1091 ICMP messages received

37 input ICMP message failed

ICMP input histogram:

destination unreachable: 11

echo requests: 931

echo replies: 112

1271 ICMP messages sent

0 ICMP messages failed

ICMP output histogram:

destination unreachable: 116

echo requests: 224

echo replies: 931

IcmpMsg:

InType0: 112

InType3: 11

InType8: 931

InType9: 37

OutType0: 931

OutType3: 116

OutType8: 224

Tcp:

169 active connection openings

3 passive connection openings

2 failed connection attempts

1 connection resets received

6 connections established

4979 segments received

6469 segments sent out

1 segments retransmitted

0 bad segments received

169 resets sent

Udp:

46181585 packets received

42863 packets to unknown port received

2536334 packet receive errors

2196 packets sent

2536334 receive buffer errors

0 send buffer errors

IgnoredMulti: 33103

UdpLite:

TcpExt:

145 TCP sockets finished time wait in fast timer

31 delayed acks sent

Quick ack mode was activated 1 times

608 packet headers predicted

433 acknowledgments not containing data payload received

1383 predicted acknowledgments

TCPSackRecovery: 1

1 fast retransmits

TCPDSACKOldSent: 1

7 connections reset due to unexpected data

TCPSackShiftFallback: 1

IPReversePathFilter: 26

TCPRcvCoalesce: 52

TCPAutoCorking: 176

TCPWantZeroWindowAdv: 1

TCPOrigDataSent: 3980

TCPDelivered: 4125

IpExt:

InMcastPkts: 133096

OutMcastPkts: 186

InBcastPkts: 33110

OutBcastPkts: 18

InOctets: 69546439932

OutOctets: 1054176

InMcastOctets: 29942300

OutMcastOctets: 78736

InBcastOctets: 8949598

OutBcastOctets: 1391

InNoECTPkts: 48876951
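The Udp counters above report 2536334 packet receive errors and the same number of receive buffer errors. Here is a small Python sketch of that arithmetic, using only the numbers from the output. It assumes every datagram that reached the UDP layer was counted in exactly one of "packets received", "packets to unknown port received" or "packet receive errors"; that accounting is an assumption made for this estimate.

```python
# Udp counters copied from the statistics output in the post.
udp = {
    "packets_received": 46181585,
    "unknown_port": 42863,
    "receive_errors": 2536334,
    "receive_buffer_errors": 2536334,
}


def buffer_loss_fraction(counters):
    """Fraction of arriving datagrams lost to receive buffer errors.

    Assumption: arrivals = delivered + unknown port + receive errors.
    """
    arrived = (
        counters["packets_received"]
        + counters["unknown_port"]
        + counters["receive_errors"]
    )
    return counters["receive_buffer_errors"] / arrived


loss = buffer_loss_fraction(udp)
# Share of all UDP receive errors that are receive buffer errors.
buffer_share = udp["receive_buffer_errors"] / udp["receive_errors"]

print(f"lost to receive buffer errors: {loss:.2%}")
print(f"share of receive errors that are buffer errors: {buffer_share:.0%}")
```

Under that assumption, roughly 5% of the UDP datagrams were lost to receive buffer errors, and all of the reported UDP receive errors are of that kind.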