Hi everyone,

I’m working on a project with the Seeed Studio XIAO ESP32S3 Sense and I’m trying to run a person detection model from SenseCraft AI. Although I followed the setup instructions and examples, I can't get the model to run because of memory constraints.

Here are the specifics of my setup:

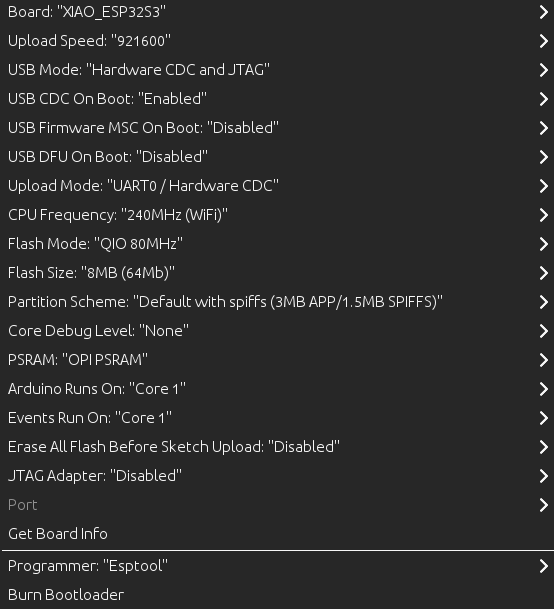

- Module: Seeed Studio XIAO ESP32S3 Sense

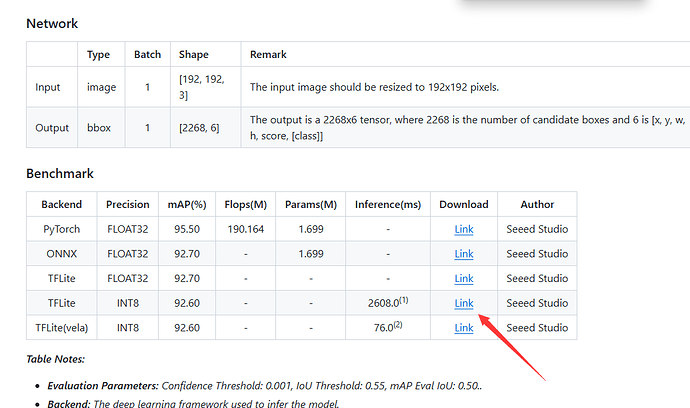

- Model: Person detection model from SenseCraft AI

- Environment: Arduino IDE

- Issue: The TensorFlow Lite model is about 1.9 MB and doesn't fit into the ESP32-S3's internal DRAM, so I’m trying to use the module's 8 MB of PSRAM instead.
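To put these numbers side by side, here is a rough memory budget written as C++ compile-time checks. The capacities are my own assumptions: 512 KB is the ESP32-S3's total on-chip SRAM (only part of it is actually available as DRAM to a sketch), 1.9 MB is rounded, and 8 MB of PSRAM is the board spec.

```cpp
#include <cstddef>

// Rough memory budget for this project. All figures are approximate assumptions.
constexpr std::size_t kKiB = 1024;
constexpr std::size_t kMiB = 1024 * kKiB;

constexpr std::size_t kInternalSram = 512 * kKiB;  // ESP32-S3 on-chip SRAM (not all usable as DRAM)
constexpr std::size_t kPsram = 8 * kMiB;           // PSRAM on the XIAO ESP32S3 Sense
constexpr std::size_t kModelBytes = 1900000;       // "approximately 1.9 MB", rounded
constexpr std::size_t kTensorArenaSize = 2000000;  // same value as in my sketch

// True if a buffer of `bytes` could fit into a region of `capacity` bytes,
// ignoring fragmentation and everything else already using that region.
constexpr bool fits(std::size_t bytes, std::size_t capacity) {
  return bytes <= capacity;
}

// Neither the model nor the arena can fit in internal SRAM at all...
static_assert(!fits(kModelBytes, kInternalSram), "model is larger than all internal SRAM");
static_assert(!fits(kTensorArenaSize, kInternalSram), "arena is larger than all internal SRAM");
// ...but the arena fits comfortably in PSRAM.
static_assert(fits(kTensorArenaSize, kPsram), "arena exceeds PSRAM");
```

If these figures are right, the model can never be held in DRAM: it has to stay in flash as read-only data (which also needs an app partition big enough for it, depending on the partition scheme selected in the Arduino IDE), and only the arena should come from PSRAM.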

Here is the relevant part of my sketch, where I allocate the tensor arena in PSRAM with `ps_malloc`:

```cpp
#include "esp_camera.h"
#include "FS.h"
#include "SD.h"
#include "SPI.h"

#include <TensorFlowLite_ESP32.h>
#include <tensorflow/lite/micro/all_ops_resolver.h>
#include <tensorflow/lite/micro/micro_error_reporter.h>
#include <tensorflow/lite/micro/micro_interpreter.h>
#include <tensorflow/lite/schema/schema_generated.h>

#include "model.h"  // model data exported from SenseCraft AI

namespace {
// Scratch memory for the TFLM tensors: 2,000,000 bytes (~2 MB).
constexpr int kTensorArenaSize = 2000000;
// Allocated at runtime from PSRAM, so it does not count against DRAM at link time.
uint8_t* tensor_arena = nullptr;
}  // namespace

void setup() {
  Serial.begin(115200);

  // ps_malloc() allocates from external PSRAM; it returns nullptr if PSRAM
  // is not enabled in the board settings or not enough of it is free.
  tensor_arena = static_cast<uint8_t*>(ps_malloc(kTensorArenaSize));
  if (tensor_arena == nullptr) {
    Serial.println("Failed to allocate tensor arena from PSRAM");
    return;
  }

  // Model setup and inference code
}

void loop() {
  // Inference handling code
}
```

Even so, the build still fails with a DRAM overflow error, and inference does not run as expected.
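One thing I haven't ruled out yet is how `model.h` declares the model array. My understanding is that a non-`const` array is placed in DRAM as initialized data, which would explain an overflow at build time even though the arena itself comes from PSRAM at runtime. Below is a sketch of the declaration I plan to try. The names `g_model` and `g_model_len` are hypothetical (typical of `xxd -i` style output, not necessarily what SenseCraft AI generates), and the eight bytes are a placeholder header, not a real model. The helper only checks the `TFL3` file identifier that TensorFlow Lite flatbuffers carry at byte offset 4.

```cpp
#include <cstddef>

// Hypothetical model.h contents. Declaring the array const (constexpr implies
// const) lets the toolchain keep it in read-only flash instead of DRAM.
// 16-byte alignment is a conservative choice for the flatbuffer.
// PLACEHOLDER: only the first 8 bytes of a .tflite file, not a real model.
alignas(16) constexpr unsigned char g_model[] = {
    0x1c, 0x00, 0x00, 0x00,  // offset to the root table (varies per model)
    0x54, 0x46, 0x4c, 0x33,  // file identifier "TFL3"
};
constexpr unsigned int g_model_len = sizeof(g_model);

// Sanity check: a TFLite flatbuffer carries the identifier "TFL3" at bytes 4..7.
constexpr bool looks_like_tflite(const unsigned char* data, std::size_t len) {
  return len >= 8 && data[4] == 'T' && data[5] == 'F' && data[6] == 'L' &&
         data[7] == '3';
}

static_assert(looks_like_tflite(g_model, g_model_len), "model header missing TFL3");
```

With the real array in place, the sketch would pass it to `tflite::GetModel(g_model)` as before; only the declaration (and therefore where the linker puts the bytes) changes.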

Has anyone here run TensorFlow Lite on the ESP32-S3, particularly with large models? I'd appreciate any advice on reducing memory usage, tips for debugging this, or general recommendations for handling large TensorFlow Lite models on constrained devices like the ESP32-S3.

Thank you in advance for your help!