Hi BenF,

I’m replying to your post of “Sat May 07, 2011 2:52 am”.

First of all, thank you very much for your time and for your improvements to this. I use the DSO Nano occasionally for developing and servicing machines with analog and digital signals below 100 kHz. Its small size makes it great for this.

I have had two main problems (I’m using version 3.61):

- In most cases I examine signals of about 50 Hz (for example, when controlling thyristors) and use TD (time/div) ≈ 5 ms. The “Normal” and “Single” trigger modes work fine. However, before using these modes, I want to see the signal “in general” in AUTO mode. At this TD the refresh rate is too slow (I didn’t measure it, but let’s say 0.2 to 0.5 s), so I cannot see all the signal changes and miss some of them.

- A low-frequency signal can carry occasional high-frequency components. These are hard to see (at the very least, you cannot set a trigger on them).

My main suggestions are:

- Increase the refresh rate in AUTO mode at larger TD settings, so that we can see what is going on with the signal.

- Always sample at 1 MHz. Unfortunately, you didn’t understand my idea. Sorry for my poor English.

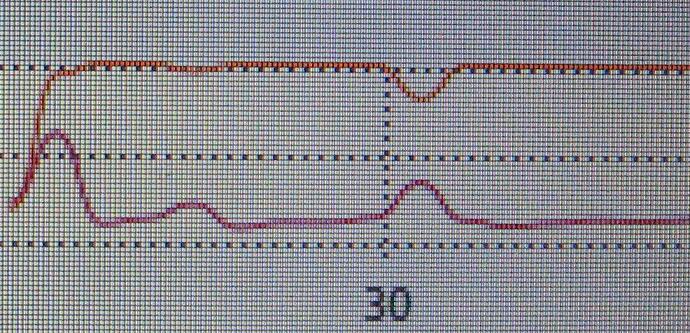

For example, take TD = 5 ms. Currently, in Normal sampling mode you sample at fs = 5 kHz and fill the buffer, which gives 25 values per time division (5 kHz × 5 ms). Because fs is only 5 kHz, faster changes (theoretically at or above fs/2 = 2.5 kHz) look very strange or can be missed, and they show up as lower frequencies. This is the expected theoretical behavior (aliasing): if you sample a 6 kHz sine at 5 kHz, you get a 1 kHz signal as the result. Use the example in my attachment and change the input frequency in field B2 to 100, 200, …, 1000, 2000, …, 6000 (6000 looks the same as 1000).
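To make the aliasing arithmetic above concrete, here is a minimal Python sketch. Python and the helper name `alias_frequency` are my own choice for illustration; this is not code from the firmware or from the attached spreadsheet. It folds an input frequency into the 0 to fs/2 band and checks that a 6 kHz sine and a 1 kHz sine produce identical samples at fs = 5 kHz.

```python
import math

def alias_frequency(f_signal: float, fs: float) -> float:
    """Apparent frequency of a sine of f_signal Hz sampled at fs Hz."""
    f = f_signal % fs      # samples cannot distinguish f from f + k*fs
    return min(f, fs - f)  # anything above fs/2 folds back below it

FS = 5000.0  # Normal-mode sample rate at TD = 5 ms
for f_in in (100, 1000, 2000, 3000, 6000):
    print(f"{f_in} Hz input appears as {alias_frequency(f_in, FS):.0f} Hz")

# One time division (25 samples): 6 kHz and 1 kHz give identical samples.
s6k = [math.sin(2 * math.pi * 6000 * k / FS) for k in range(25)]
s1k = [math.sin(2 * math.pi * 1000 * k / FS) for k in range(25)]
max_diff = max(abs(a - b) for a, b in zip(s6k, s1k))
print(f"largest sample difference: {max_diff:.1e}")
```

For inputs that fold from the upper half of the band (for example 4 kHz → 1 kHz), the folded sine also comes out phase-inverted; the sketch reports only the apparent frequency.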

My idea is the following. For ONE pixel on screen (5 ms / 25 = 200 µs), capture 200 µs / (1/1 MHz) = 200 samples. Calculate the Average, Min and Max values of this set of 200 samples (you need just three variables), put all three values in the buffer and plot them on screen. You can use the Average value to draw lines as before, while the Min and Max values can be drawn as a vertical line with lower color intensity, or just as two dots.
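The per-pixel reduction described above can be sketched as follows. This is a hypothetical Python illustration, assuming 8-bit ADC codes and the numbers from the example (1 MHz sampling, TD = 5 ms, 25 pixels per division); the names `decimate_min_avg_max` and `PixelEnvelope` are made up here, and real firmware would update the three running values while sampling rather than work on a stored array.

```python
from dataclasses import dataclass
from typing import List, Sequence

FS_MHZ = 1            # assumed fast sampling rate: 1 MHz (1 sample per us)
TD_US = 5000          # time/div = 5 ms
PIXELS_PER_DIV = 25   # screen pixels per division
SAMPLES_PER_PIXEL = FS_MHZ * TD_US // PIXELS_PER_DIV  # 5000 // 25 = 200

@dataclass
class PixelEnvelope:
    avg: float  # drawn as the normal trace
    lo: int     # Min, bottom of a dim vertical line
    hi: int     # Max, top of that line

def decimate_min_avg_max(samples: Sequence[int], per_pixel: int) -> List[PixelEnvelope]:
    """Reduce raw ADC samples to one (avg, min, max) triple per screen pixel.

    Only three running values are kept per pixel; a trailing partial
    group of samples is ignored.
    """
    out: List[PixelEnvelope] = []
    for start in range(0, len(samples) - per_pixel + 1, per_pixel):
        total = 0
        lo = hi = samples[start]
        for s in samples[start:start + per_pixel]:
            total += s
            lo = min(lo, s)
            hi = max(hi, s)
        out.append(PixelEnvelope(total / per_pixel, lo, hi))
    return out

# Two pixels of a flat 8-bit signal (code 128) with a 5 us spike in the second.
samples = [128] * (2 * SAMPLES_PER_PIXEL)
samples[250:255] = [250] * 5
for px in decimate_min_avg_max(samples, SAMPLES_PER_PIXEL):
    print(px)
```

The spike barely moves the Average of the second pixel (from 128 to about 131), but it shows up clearly in Max, which is what the dim vertical Min/Max line would make visible.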

This way we can DETECT and see the amplitude of high-frequency changes even on low-frequency signals. For example, a very short impulse with dt = 5 µs can currently be missed or displayed only occasionally. With Min and Max values we will always see it, because we sample with dt = 1 µs (we will catch it 5 times).
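A quick simulation of the 5 µs impulse example, under the same assumptions (1 µs sample grid, 200 samples per pixel, baseline code 128 and pulse code 250, all hypothetical values chosen for illustration): it slides the pulse through every possible start position within one pixel and counts how often plain 5 kHz sampling (keeping every 200th sample) sees it, versus how often the per-pixel Max sees it.

```python
PER_PIXEL = 200        # raw 1 MHz samples per screen pixel (200 us)
WIDTH = 5              # pulse width in samples (5 us)
N = 2 * PER_PIXEL      # two pixels of raw data
BASE, PEAK = 128, 250  # hypothetical 8-bit ADC codes

def pulse_signal(start: int) -> list:
    """Flat baseline with one WIDTH-sample pulse beginning at index `start`."""
    return [PEAK if start <= i < start + WIDTH else BASE for i in range(N)]

caught_plain = 0
caught_envelope = 0
for start in range(PER_PIXEL):
    sig = pulse_signal(start)
    # Plain 5 kHz sampling keeps only one raw sample per pixel.
    plain = sig[::PER_PIXEL]
    if max(plain) > BASE:
        caught_plain += 1
    # Min/Max decimation keeps the maximum of each pixel's 200 samples.
    pixel_max = [max(sig[i:i + PER_PIXEL]) for i in range(0, N, PER_PIXEL)]
    if max(pixel_max) > BASE:
        caught_envelope += 1
    # At 1 MHz the pulse always lands on exactly WIDTH raw samples.
    assert sum(1 for s in sig if s > BASE) == WIDTH

print(f"plain 5 kHz: {caught_plain}/{PER_PIXEL}, min/max: {caught_envelope}/{PER_PIXEL}")
```

Plain 5 kHz sampling catches the pulse in only 5 of the 200 positions (2.5%), whereas the Max envelope catches it every time.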

For the trigger modes, there should be an option to trigger on the Average only or on all three values. Alternatively, just use the Average value to keep this simple.
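One possible reading of the two trigger options, sketched in Python under my own assumptions (rising-edge trigger only, envelopes given as `(avg, min, max)` tuples, hypothetical function name `find_rising_trigger`): with the Average-only option a short spike inside one pixel never fires the trigger, while also checking Max lets it fire. A falling-edge trigger would check Min in the same way.

```python
from typing import Optional, Sequence, Tuple

Envelope = Tuple[float, float, float]  # (avg, min, max) for one pixel

def find_rising_trigger(env: Sequence[Envelope], level: float,
                        use_envelope: bool) -> Optional[int]:
    """Index of the first pixel where the signal rises through `level`.

    use_envelope=False: only the Average is compared against the level.
    use_envelope=True: a rising crossing of the pixel Max also triggers,
    so a spike hidden inside a single pixel can fire the trigger.
    """
    for i in range(1, len(env)):
        prev_avg, _, prev_max = env[i - 1]
        avg, _, cur_max = env[i]
        if prev_avg < level <= avg:
            return i
        if use_envelope and prev_max < level <= cur_max:
            return i
    return None

# Flat 128, then one pixel containing a short spike up to 250, then flat again.
pixels = [(128.0, 128, 128), (131.05, 128, 250), (128.0, 128, 128)]
print(find_rising_trigger(pixels, 200, use_envelope=False))
print(find_rising_trigger(pixels, 200, use_envelope=True))
```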

This reduces the buffer capacity by a factor of 2 or 3, depending on whether Min and Max are stored with 4 or 8 bits each. It is probably best to store each value with 8 bits: this keeps things simple and gives a real picture of the signal, instead of the scheme I suggested in my second email (Min and Max as 4-bit non-linear differences from the Average).
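The buffer-size factor above is simple arithmetic on bits per displayed point. The sketch below uses a hypothetical 4096-byte buffer purely for illustration (not the DSO Nano's actual buffer size) and assumes 8 bits for the Average.

```python
def points_in_buffer(buffer_bytes: int, minmax_bits: int, avg_bits: int = 8) -> int:
    """Number of displayed points that fit when each stores avg + min + max."""
    bits_per_point = avg_bits + 2 * minmax_bits
    return buffer_bytes * 8 // bits_per_point

BUFFER_BYTES = 4096  # hypothetical size, for illustration only
for minmax_bits in (0, 4, 8):
    print(minmax_bits, points_in_buffer(BUFFER_BYTES, minmax_bits))
```

With 4-bit Min and Max one point takes 16 bits (factor 2); with 8-bit values it takes 24 bits (factor 3, rounded down to 1365 points here).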

There could be an option to enable or disable this mode.

- Regarding the Normal / Fast sampling modes: I get a strange picture, and the signal levels differ between the two modes. I expected that the level of a high-frequency signal could be higher (if a high-frequency value is caught), but I got the opposite (I think; I am not sure now).

Anyway, with a higher refresh rate and the additional Min and Max values, we would not need Fast mode.

Thanks and regards,

Dejan

sine at 5kHz sampling.zip (38.2 KB)