[size=200]Daft Punk Helmet Project [/size]

by ThreeFN

[size=150]The History:[/size]

This project began in October 2006 as a challenge from a friend. After I showed him Interstella 5555, one of Daft Punk’s signature films, he challenged me to make a replica Guy-Manuel de Homem-Christo helmet, including the full-face “screen” made from LEDs seen in some of the duo’s Japan interviews. Since that day this project has been a minor, and sometimes major, obsession of mine.

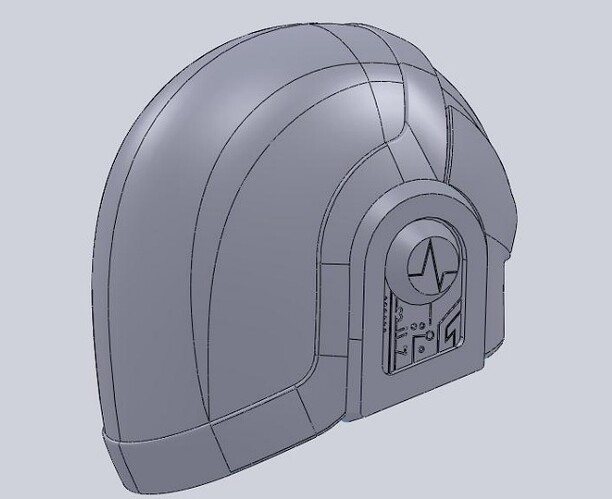

When I began to spec the project, the original resolution was 15x40 RGY LEDs. This somehow morphed into 16x32 RGB LEDs, and finally into 16x24 RGB after more detailed measurements and a few iterations of a solid model mock up.

The project largely languished in purgatory for years. I wrote many design documents, mostly as sketches in PowerPoint, thinking through the different ways the project could be completed. I had a little programming experience with Ada 95, an all but forgotten language, and no microcontroller experience to speak of. A friend at university was my main source of technical microcontroller (uC) knowledge, and I’m sure I drove him nuts with all of my questions.

I started with the idea of using the MAX7965; however, no one had managed to accomplish RGB with it, and over the past 3 years I have heard of only one or two more who have tried. Most discovered timing problems that gave abysmal frame rates. Next I was drawn to the Sparkfun LED backpacks, since they provided an integrated solution, but they didn’t seem particularly applicable. At that point I had accepted that I would have to custom build my own LED screen out of individually wired LEDs, and the Sparkfun backpack’s cost and size/shape were becoming more of a hindrance as I got deeper into the design. I also wasn’t sure how well it would play with the LEDs I would eventually use, as it was very much designed for the LED matrix unit it ships with and didn’t look particularly friendly to a custom hack job.

As I was coming to the conclusion that I would have to make my own controller boards, most likely a cloned but smaller version of the Sparkfun backpack, and was within days of downloading Eagle and giving it a shot, I found the Rainbowduino. It was like the stars had aligned. I had a little knowledge of the Arduino language at this point from an unrelated RFID door unlocker project, so I wouldn’t have to jump into the programming totally blind. The Rainbowduino also fixed a lot of the problems I would have had with the Sparkfun backpack or my DIY clone. The drive current is adjustable, the pinout is logically grouped by color (not the case with the Sparkfun) and it is daisy-chainable right out of the box. It was also cheaper, even when I bought the prebuilt LED matrices to prototype with. Within hours I had ordered 6 of them and begun the long and extremely trying task of rewriting the firmware.

[size=150]Goal & Purpose:[/size]

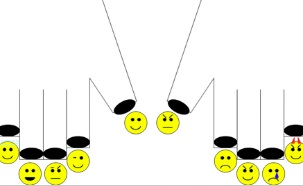

Part of my goal with this project is I want it to be an “immersive experience” for people seeing the complete costume and helmet. I don’t want it to simply look cool, running static loops of animations like something from the Las Vegas strip. I want it to respond and interact in a cool way. Ideally, I would be able to have a simple conversation with someone using only images and responses shown on the display. I would like to really look, and act, like a robot, with no voice and just a display for interaction with viewers. It’s all about suspension of disbelief.

The best way to explain my approach to this project and the method to my madness is a perpetual need to “one-up.” Sometimes this means going further than what has already been done, like doing RGB instead of Daft Punk’s RGY. Sometimes this means adding things that I thought would be cool way back when, such as my virtual eye OSD system. Sometimes this means going as far as my skills and available hardware will let me go, such as my spectrum analyzer animation. The spectrum analyzer was definitely in the “when pigs fly” category when I first started in 2006, and now it’s been implemented. Suffice it to say, I’m a particularly driven individual on this project.

This project has been boiling for so long in the back of my head that it’s a mild obsession. I don’t think I will ever be truly happy or truly finished. I will always find new and exciting ideas that I want to implement. The need to one-up always seems to put the project just out of reach, and yet I keep moving forward. That is part of why I keep increasing its complexity: I continue to surprise myself and accomplish what I think can’t be done.

[size=150]The Work:[/size]

There were many problems that had to be overcome, most of which I had figured out before even ordering the Rainbowduinos. The default firmware had done a lot of the heavy lifting for me, namely the low-level, shift-out LED control, so I could instead focus on extending the command and communication levels of the software. I decided to stick with the I2C communication bus for a few reasons: it was already populated with up and downstream connectors, it required the minimum number of bus wires, and it was the architecture I wanted, with a single master and multiple addressable slaves on one bus.

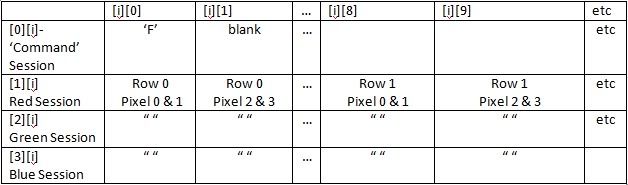

The default commands were a good starting point, but since my screen wasn’t single row “billboard” style and I wanted to do complex animations calculated on the master Arduino, I needed the ability to send color data for all pixels at once as part of a single command. This meant a minimum of 96 bytes of data (64 LEDs × 3 colors × 4 bits) if I wanted the full 4 bits per color, and of course I wanted it. I immediately decided to do the I2C bus speed hack that boosts the Arduino’s default 100 kHz speed up to 400 kHz. The Arduino I2C bus buffer is only 32 bytes, so I implemented a simple handshake routine where the master status callback now returns the number of the next desired block of data. Theoretically this would improve error handling, though in practice I’ve never seen issues that weren’t related to a block deliberately interrupted by a reprogramming of the master.
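The block handshake described above can be sketched roughly as follows. This is a host-side illustration only, not the actual firmware: `FakeSlave`, `receiveBlock`, and `sendFrame` are hypothetical names, and the real code talks over the Wire library rather than calling the slave directly. The sizes (96-byte frame, 32-byte blocks) come from the text.

```cpp
#include <assert.h>
#include <stdint.h>
#include <string.h>

// Sizes from the text: 64 LEDs x 3 colors x 4 bits = 96 bytes per frame,
// sent through a 32-byte Wire buffer, so 3 blocks per frame.
enum {
    kFrameBytes = 64 * 3 * 4 / 8,
    kBlockBytes = 32,
    kBlocksPerFrame = kFrameBytes / kBlockBytes
};

// Hypothetical stand-in for one slave Rainbowduino (not the real firmware).
struct FakeSlave {
    uint8_t buffer[kFrameBytes];
    uint8_t nextBlock;  // the block number its status reply asks for
};

// Slave side: store the block only if it is the one expected, then report
// which block should come next.
uint8_t receiveBlock(FakeSlave* slave, uint8_t blockNo, const uint8_t* data) {
    if (blockNo == slave->nextBlock) {
        memcpy(slave->buffer + blockNo * kBlockBytes, data, kBlockBytes);
        slave->nextBlock++;
    }
    return slave->nextBlock;
}

// Master side: always send whichever block the slave last asked for.
// Returns the number of transmissions used.
int sendFrame(FakeSlave* slave, const uint8_t* frame) {
    assert(slave != 0 && frame != 0);
    slave->nextBlock = 0;  // start of a new frame
    int transmissions = 0;
    uint8_t wanted = 0;
    while (wanted < kBlocksPerFrame) {
        wanted = receiveBlock(slave, wanted, frame + wanted * kBlockBytes);
        ++transmissions;
    }
    return transmissions;
}
```

In this sketch a full frame always takes three transmissions. Having the slave name the block it wants next is what would let the master resend a block after an interrupted transfer.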

After I had implemented the ability to send a “full frame” of color data that could be written one-for-one to the Rainbowduino’s display buffer, I began doing my early animations and static images. I quickly realized that complex animations that took considerable computation time tanked on frame rate due to the relatively long transmission time of 3 blocks of data at 32 bytes per block. I did some optimization of the code, but the frame rate was still not quite sufficient in my opinion. I also realized that a lot of my static images contained three or fewer colors, and those were taking up a lot of my PROGMEM space when they each had 3 × 32 × 6 = 576 bytes per “screen.” I had only programmed ten static images before I had run out of the 14K of flash I had on my Arduino 168. This meant I needed a new data structure and command set that was more efficient.
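The 576-byte figure is 3 blocks × 32 bytes × 6 Rainbowduinos. As a rough illustration of where the 32 bytes per color come from, here is a minimal sketch that packs 4-bit brightness levels two LEDs to a byte. The nibble order (first LED in the high nibble) and the name `packPlane` are assumptions for illustration, not taken from the firmware.

```cpp
#include <assert.h>
#include <stdint.h>

enum {
    kLeds = 64,                            // one 8x8 matrix
    kPlaneBytes = kLeds / 2,               // 4 bits per LED, two LEDs per byte
    kFrameBytes = 3 * kPlaneBytes,         // red, green and blue planes
    kRainbows = 6,                         // boards in the full screen
    kScreenBytes = kFrameBytes * kRainbows // one full-color image
};

// Packs 64 brightness levels (0..15) for ONE color into 32 bytes.
// Assumption: the first LED of each pair goes in the high nibble.
void packPlane(const uint8_t levels[kLeds], uint8_t out[kPlaneBytes]) {
    for (int i = 0; i < kPlaneBytes; ++i) {
        uint8_t first = levels[2 * i] & 0x0F;
        uint8_t second = levels[2 * i + 1] & 0x0F;
        out[i] = (uint8_t)((first << 4) | second);
    }
}
```

At `kScreenBytes` = 576 bytes per image, ten full-color images already take 5760 bytes of flash before any code is counted.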

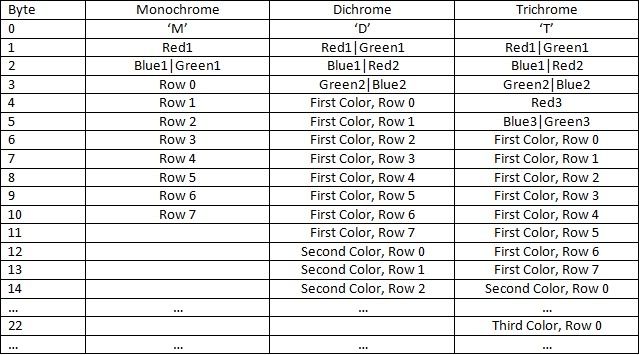

To fix my space/frame rate problem, I made three limited color command/data types: monochrome, dichrome, and trichrome. As the names imply, each one is prefaced with one, two, or three color values, much like the base command set. This is followed by sets of 8 bytes, one set for each color. The 8 bytes contain on/off bits for each LED, row by row, left to right. This greatly reduced the memory footprint of many of my static images, and it meant that animations could be more complex with very good frame rates so long as they used a reduced color set. This was especially helpful with my Conway’s Game of Life animation. My frame rates running a single color loop with very little master side calculation (close to the maximum possible) look something like this:

Full RGB: ~24fps

Trichrome: ~80fps

Dichrome: ~110fps

Monochrome: >160fps

The main reason for the incredibly high frame rates is that the chromatic commands can all fit in the first block of transmission (<32 bytes). Most transmission delay is due to the delay required after Wire.endTransmission, when the buffer is emptied and sent on the bus. Even at only 250us this delay adds up quickly with 6 Rainbowduinos, and it is present no matter how much data is transmitted.
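To make the chromatic layout concrete, here is a minimal sketch of packing one color’s on/off bitmap into its 8 bytes, one byte per row. The bit order (leftmost LED in the most significant bit) and the names `packMask` and `maskPayloadBytes` are assumptions for illustration. The byte counts cover only the bitmaps, not the command letter or color values, whose exact size is not given here.

```cpp
#include <assert.h>
#include <stdint.h>

enum { kRows = 8, kCols = 8, kMaskBytes = 8, kFullFrameBytes = 96 };

// Bitmap bytes for an N-color chromatic frame (color values not counted):
// 8 for monochrome, 16 for dichrome, 24 for trichrome, versus 96 for 'F'.
int maskPayloadBytes(int colors) {
    assert(colors >= 1 && colors <= 3);
    return colors * kMaskBytes;
}

// Packs a row-major 8x8 on/off image into 8 bytes, one byte per row.
// Assumption: the leftmost LED of a row is the most significant bit.
void packMask(const bool* pixels, uint8_t out[kMaskBytes]) {
    for (int row = 0; row < kRows; ++row) {
        uint8_t bits = 0;
        for (int col = 0; col < kCols; ++col) {
            if (pixels[row * kCols + col]) {
                bits |= (uint8_t)(0x80u >> col);
            }
        }
        out[row] = bits;
    }
}
```

Even the trichrome bitmaps come to 24 bytes, a quarter of a full frame, which is why a whole chromatic command can go out in a single transmission per board.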

The firmware has remained largely unchanged since the implementation of the chromatics. I’ve been meaning to put one or two dedicated random white noise animations directly into the firmware, but haven’t gotten around to it after running into a function problem. I’m sure I could add quadchrome and so on up to the Nth degree, but having trichrome whittled down most of my flash memory outlay, and that was its primary purpose. The increased frame rate was just a very nice bonus.

I’ve also thought about adding my own ASCII character system to the firmware, but with the chromatics already programmed and having very good frame rates, I’m finding very little need to do things on the slave side rather than the master side.

[size=150]The How-To:[/size]

There isn’t much to say on how to get to the same place I am. OK, that’s not true, I wrote a whole manual document which you will find along with the code. I’ve copied the comments included in the code below for some starting information:

//=====================================================================

//Rainbowduino Seeedmaster V2

//Originally provided by Seeed Studio

//Modified by Scott C.

//=====================================================================

//=====================================================================

//This is master-side code that contains some prebuilt functions for

//sending commands to a Rainbowduino running V2.7 or later

//The original commands have not been changed, so this code

//could theoretically send commands to V2, but only ‘R’ commands. Both

//the master and slave code have been modified to support multiple data

//transmission sessions over the IIC communications bus, due to the Wire

//Library limitation of only 32 bytes per transmission. This is most

//evident in changes to the function SendCMD.

//

//In addition a few new command types have been added. A command

//starting with ‘F’ sends color data for each of the 64 leds set up

//similar to the buffer on the Rainbowduino (byte per two leds). This

//allows for complex animations to be calculated on the master, and

//then the “frame” of data for all leds to be sent to the slave

//Rainbowduino. This is particularly helpful for animations involving

//more than one Rainbowduino comprising a “screen.”

//

//There are also three other command types dubbed “chromatics.”

//Monochromatic (one color), dichromatic (two), and trichromatic

//(three) frames can be sent with commands starting with ‘M’,‘D’, and

//‘T’ respectively. Each of these commands consists of the relevant

//number of RGB values, followed by 8 bytes per unique color. Each

//byte in one of these sets represents a line on the display, 1 meaning

//the led is on, 0 off. These commands are much smaller, and transmit

//in the first transmission session so they are much faster, especially

//important for large screens with many Rainbowduinos.

//

//It is highly recommended for ‘F’ commands and large screens to modify

//the Wire library for the faster 400KHz transmission.

//Info on how to do this can be found here:

//http://www.arduino.cc/cgi-bin/yabb2/YaBB.pl?num=1241668644/0

//

//VERY BIG NOTE: The functions outlined below, specifically ShowFrame,

//can very quickly eat up your available SRAM. I developed this on the

//older 168 chip, and kept a very keen eye for minimizing SRAM usage

//with unsigned char datatypes wherever possible. However, a single

//input array of the kind in ShowFrame is 96 bytes, 1/10th of the 1K

//SRAM is gone right there. The 168 has made way for the 328, but I

//recommend becoming familiar with PROGMEM and rewriting your functions

//to extract data from flash memory, rather than defining the data like

//normal variables. Not having enough SRAM shows up as very bizarre

//errors that are difficult to track down and fix, and you will not

//be notified at compile time. Moving images and data to PROGMEM

//allows you to see how much storage you are using at compile time,

//since PROGMEM variables are stored in flash and become part of the

//sketch size in flash memory. Overstepping the available flash memory

//will throw an error when compiled/uploaded.

//

//The screen I rewrote this code for is 6 Rainbowduinos, and the master

//stores over 30 images using chromatics and a handful of full RGB

//images and is only 7.5K in flash memory. Some examples of this can

//be found by searching Youtube for user ThreeFN.

//======================================================================
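As a rough illustration of the PROGMEM advice in the comments above, the sketch below keeps one monochrome image in flash and reads it back a byte at a time. The image data and the names `kBoxImage`, `readRow`, and `countLitLeds` are hypothetical and not part of the released code. The `#else` branch is only a host-side stand-in so the same file compiles off the AVR.

```cpp
#include <assert.h>
#include <stdint.h>

#if defined(__AVR__)
#include <avr/pgmspace.h>  // real PROGMEM and pgm_read_byte on the Arduino
#else
// Host-side stand-ins (assumption: only used for off-target testing).
#define PROGMEM
#define pgm_read_byte(addr) (*(const uint8_t*)(addr))
#endif

// Hypothetical 8x8 one-bit image (a hollow box), stored in flash so it
// costs no SRAM until a row is actually read.
const uint8_t kBoxImage[8] PROGMEM = {
    0xFF, 0x81, 0x81, 0x81, 0x81, 0x81, 0x81, 0xFF
};

// Fetches one row mask from flash; only a single byte lands in SRAM.
uint8_t readRow(const uint8_t* image, uint8_t row) {
    assert(row < 8);
    return pgm_read_byte(image + row);
}

// Example consumer: counts lit LEDs without copying the image to SRAM.
uint8_t countLitLeds(const uint8_t* image) {
    uint8_t lit = 0;
    for (uint8_t row = 0; row < 8; ++row) {
        for (uint8_t bits = readRow(image, row); bits != 0; bits >>= 1) {
            lit += bits & 1;
        }
    }
    return lit;
}
```

A send function written this way would read each byte with `pgm_read_byte` straight into the outgoing transmission, so the number of stored images is limited by flash rather than by the 1K of SRAM.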

[size=150]The Rest of the Project:[/size]

There are now so many parts to this project, it can be really tricky to keep it all straight in my head, let alone be able to explain it. I will try and break it down into its logical parts as best I can.

The Display: the backbone of this project, consisting of the 6 Rainbowduinos that will drive a custom-built, hand-wired 16x24 RGB LED matrix.

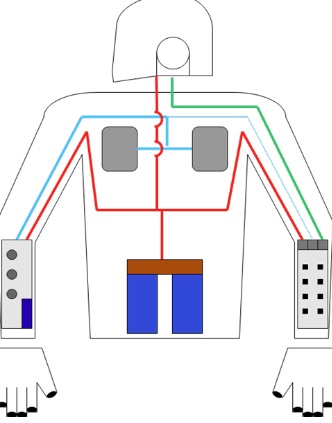

The Virtual Eye System: The VES is a combination of a small CMOS camera, an on screen display (OSD) chip, and a set of video glasses. Since I won’t be able to see through the LED screen, I needed a camera/goggle system to supplement my vision. I one-upped this requirement by adding the OSD, which will be used to give status information. Early testing on this stuff has been completed, and I can say that there are a lot of wires needed for this, since each part has its own video and power connection requirement.

The Interface: with the addition of the VES OSD the interface actually became a lot simpler. I had long planned for a set of armlet control panels with buttons on them to give input to the system. These were used by Daft Punk in their system, but my limited vision would hamper the use of traditional buttons. I thought of a way around this problem: buttons placed in the fingertips of the plated ‘robot’ gloves I would be wearing. With these button gloves and an on screen menu system, it could be very easy and unobtrusive to manipulate the display. I’m looking into methods other than push buttons right now, such as bend resistors or hall effect sensors, so that the fingertips can be smaller and require less pressure/pain to push.

The Power System: I’m planning on 5 hours of un-tethered run time. This means I will probably run the whole system off of an external laptop battery, which will plug into a power distribution and monitoring system. I have a particularly nifty idea to also carry the battery’s power supply as part of the system, so that I can plug into wall outlets on the fly through a plug “tail,” maintaining the suspension of disbelief and the immersive-ness of the costume.

The Under Suit: I’d like to be able to wear a variety of different exterior costumes with the helmet to suit the mood and to change things up from Daft Punk’s signature leather outfits. To allow for different exterior costumes but still keep the cables, connections, and components manageable, I’ve been working on an electronics under suit that will be worn under whatever costume I decide to wear that day. This not only gives me something to sew cables and connections to permanently, it also means I don’t have to use a backpack to contain all of the parts I’m going to need to carry around. I think it would add a lot if I didn’t need a backpack; certainly it would add to the one-up factor and a clean appearance. Practicality may win out and require a backpack, but I’m still very much trying to keep components flat and small so that they can be put in pockets sewn into the under suit.

[size=150]The Project Now:[/size]

The current problem that occupies my thoughts is manufacturing the helmet. My company’s machinist has become very interested in the project and has offered machine time and assistance in making my molds, simplifying my work tremendously. I will of course continue to write all of the code that is as yet unwritten. The virtual eye and information management system is next on that list.

I continue to move forward, but it all seems to go in slow motion. It’s taken me years to get to this point, and it will certainly take months for me to finish. My highest hopes are for a debut during the US convention season next spring/summer. I’m hoping to debut at a big con, like Comic Con or Dragon Con.

[size=150]Additional Media:[/size]

I have made a few videos of my project, which were recently reposted to Vimeo for the competition. You can find these videos on Vimeo or on Youtube under the username ThreeFN.

http://www.youtube.com/user/ThreeFN

I also post regular progress updates on The Daft Club Forums under the same user name:

http://www.thedaftclub.com/showthread.php?t=1334&page=287

Source code download

Rainbow_Command_Beta2.zip (20.7 KB)