Interesting. Ok, I’ll give this a go again today. Maybe I did something wrong.

Now that’s surprising, as that’s different from how it shows up on Mac (there it just shows up as “XIAO_ESP32S3”, which is not as elegant a name, lol).

I think I may have had it commented out near the top of the .ino I sent you, but did you manage to get the `#define MANUFACTURER_NAME` thing to do anything?

Kind of. I do a lot of (low-latency) machine learning stuff for drums and have designed some custom sensor stuff for the drums themselves. I’m trying to do something similar for an acoustic cymbal too. Since a cymbal physically moves a lot while being played (unlike a drum), it seemed interesting to try to capture some of that movement as controller data.

I still can’t attach pics to show the testing setup, but on the underside of the cymbal, near where it is mounted, I have attached a contact microphone and a 6DOF IMU.

This is my initial proof of concept for the IMU stuff. In practice the mapping would be more interesting; this is just to show how fast and sensitive the IMU is in this context.

Every time the cymbal is hit, it triggers a burst of noise, and the movement of the cymbal controls a filter and a phaser on the noise.

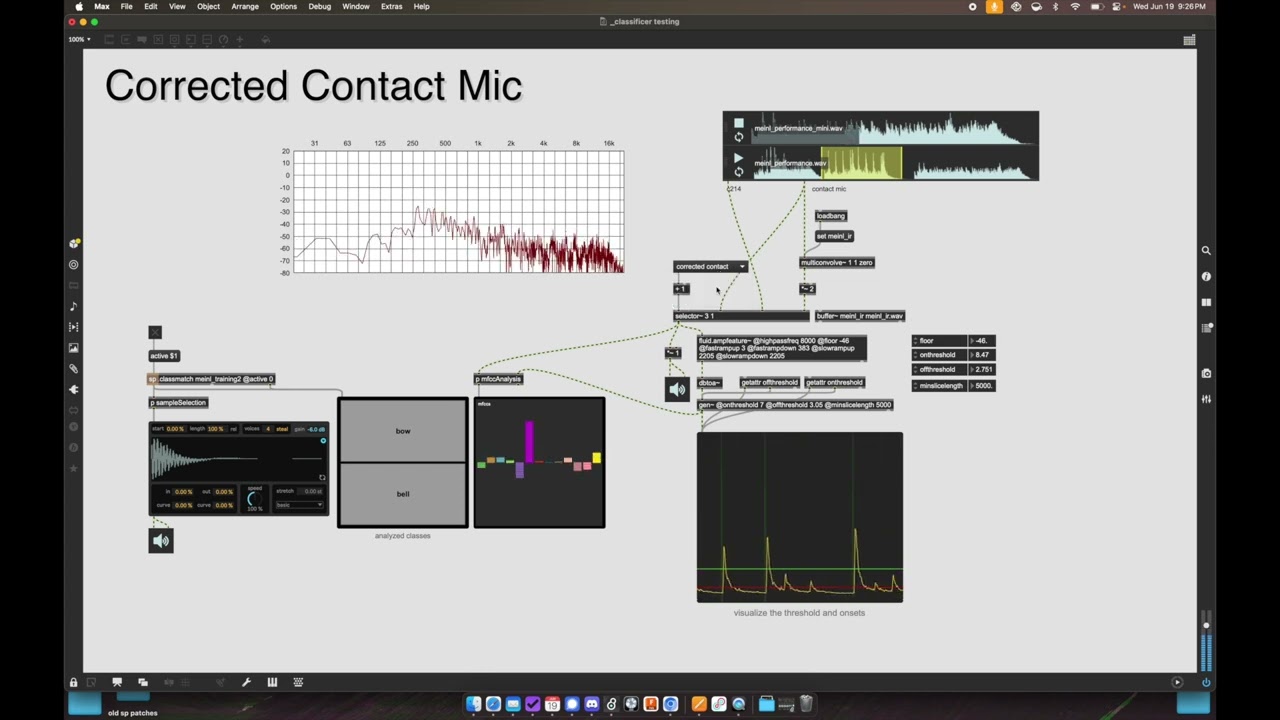
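For anyone curious how a hit-plus-motion mapping like the one above might be wired up, here is a minimal sketch. It assumes the contact-mic signal has already been reduced to a per-frame amplitude envelope (0 to 1) and that the IMU gyro delivers angular rates in deg/s. The class names, thresholds and frequency range are hypothetical illustration values, not taken from the actual setup, and only the filter-cutoff part of the mapping is shown.

```python
import math


class HitDetector:
    """Flags a new hit when the contact-mic envelope crosses a threshold.

    Hypothetical parameters: `threshold` is a normalised amplitude and
    `hold_samples` is the minimum number of frames between hits, so the
    ringing of one strike does not retrigger the noise burst.
    """

    def __init__(self, threshold=0.3, hold_samples=50):
        self.threshold = threshold
        self.hold_samples = hold_samples
        self._since_last = hold_samples  # allow a hit on the very first frame

    def process(self, envelope):
        """Return True on the frame where a new hit is detected."""
        self._since_last += 1
        if envelope >= self.threshold and self._since_last >= self.hold_samples:
            self._since_last = 0
            return True
        return False


class MotionMapper:
    """Maps gyro magnitude (deg/s) to a filter cutoff (Hz).

    The magnitude is smoothed with a one-pole low-pass, clamped to
    [0, max_rate], then mapped exponentially between f_min and f_max so
    equal changes in motion give roughly equal changes in perceived pitch.
    """

    def __init__(self, max_rate=500.0, f_min=200.0, f_max=8000.0, smoothing=0.2):
        self.max_rate = max_rate
        self.f_min = f_min
        self.f_max = f_max
        self.smoothing = smoothing  # 0 < smoothing <= 1; 1 means no smoothing
        self._state = 0.0

    def process(self, gx, gy, gz):
        """Return the cutoff frequency for one gyro reading."""
        magnitude = math.sqrt(gx * gx + gy * gy + gz * gz)
        self._state += self.smoothing * (magnitude - self._state)
        norm = min(max(self._state / self.max_rate, 0.0), 1.0)
        return self.f_min * (self.f_max / self.f_min) ** norm
```

The smoothing coefficient is the main latency trade-off: a value near 1 reacts almost immediately but passes through sensor jitter, while smaller values give a steadier filter sweep at the cost of some lag behind the cymbal's actual motion.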

Less relevant to the ESP32 code, but this is a proof-of-concept test of the audio from the contact microphone. I’m comparing a real (good) microphone picking up the acoustic sound of the cymbal against the contact microphone in a less-than-ideal position on the cymbal (typically a really bad idea). I then apply some impulse-response microphone correction to “compensate” for the difference between the two, and finally run some classification to determine, from the audio analysis alone, whether the cymbal is being struck on the body or on the bell area: